Understanding the Neural Tangent Kernel

My attempt at distilling the ideas behind the neural tangent kernel, which has been making waves in recent theoretical deep learning research.

A blog about machine learning and math.

The factor graph is a beautiful tool for visualizing complex matrix operations and understanding tensor networks, as well as for proving seemingly complicated properties through simple visual proofs.

I experiment with Neural ODEs and touch on parallels between adversarial robustness and equilibria of dynamical systems.

I train a character-level decoder RNN to generate words, conditioned on a word embedding that represents the meaning of the word.

I train autoencoders to identify components of doodles using a synthetic dataset, and use them to create nifty animations by interpolating in latent space.

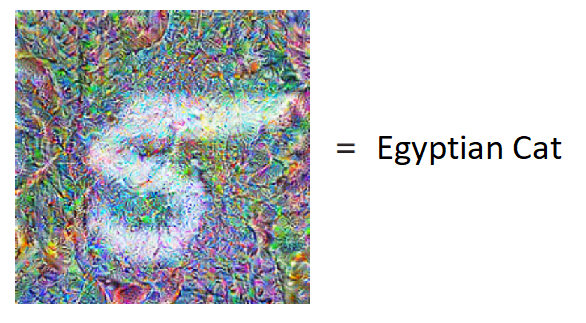

Google Brain recently published a paper titled "Adversarial Reprogramming of Neural Networks", which caught my attention. In this post I explore the ideas of the paper and run some experiments of my own.